Announcing the UiPath AI Trust Layer: Responsibly manage Generative AI

At FORWARD VI, we announced new capabilities to help make all users more productive, such as UiPath Autopilot™, powered by Generative AI in the UiPath Business Automation Platform. We also announced the upcoming availability of the UiPath AI Trust Layer to assure our customers that we are diligently safeguarding their data as they use our new Generative AI experiences. The UiPath AI Trust Layer will extend the same level of security and control that UiPath has provided across all its offerings and AI-based services to this next generation of Generative AI-powered automation.

The importance of an AI data policy for the enterprise

The accessibility of Generative AI models has expanded dramatically in 2023. Chat interfaces made it easy for anyone to engage with AI via natural language prompts, broadening the applicability of AI to an unprecedented range of new use cases. API connectors and large language model (LLM) frameworks (e.g., LangChain) allowed users to build new applications harnessing the power of AI. However, this ubiquitous access also reinforces some of the persistent concerns related to the use of Generative AI and LLMs:

Data privacy and security:

Where is my data being sent?

Will the privacy and security of my data be protected?

Are my prompts and responses compliant with my company’s regulatory standards?

Data and developer permissions for Generative AI development:

Is my data being used to train general third-party models?

Do the right developers have access to these Generative AI features?

Will these models make my data available to my competitors for their development purposes?

Before we dive into the additional capabilities provided by the UiPath AI Trust Layer itself, let's recap the UiPath enterprise policies regarding customer data storage, transmission, and use for training of third-party LLMs.

Is my data being used to train third-party LLMs?

UiPath has agreements with all its third-party data sub-processors, including LLM providers, that prohibit them from using customer data passed through the UiPath Platform for model training.

Where is my data being sent and how is it being used with third-party LLMs?

Data that is used as context for prompts will be securely sent by UiPath only to third-party LLMs in the UiPath trusted ecosystem for the purpose of executing the relevant prompt-response task.

Where is my data being stored and processed when used with third-party LLMs?

Enterprise customers can choose the region of their tenants when creating them, and data from product use by users in those tenants will be processed in that region. The locations where third-party sub-processors process data are disclosed on the UiPath Trust and Security site. Your data will remain inside the established UiPath “trust boundary” and no data is stored outside of that boundary by third-party models. Additional details can be found in UiPath Automation Cloud Data Residency.

You can find answers to additional questions you may have on our Trust and Security Site.

We recognize that these answers alone may not be enough: customers want to see this governance in action. The creation of proper training programs and process protocols is fundamental to ensure AI objectives align with company ethical and compliance guidelines. Generative AI technology providers like UiPath must also ensure that for any software which utilizes AI to improve in-product experiences, power platform features, or be trained for custom use cases, there is:

A clear understanding of how and where customer data is being used with these models

Governance over the lifecycle of data used with AI models, and assurances around its security and integrity

Confidence that model predictions are reliable, accurate, secure, and explainable

Adhering to these principles ensures that any application using the output from these models is not adversely affected. As AI becomes more of a standard component of software, it’s increasingly important that technology providers integrate these AI administrative capabilities into their platforms.

UiPath AI Trust Layer

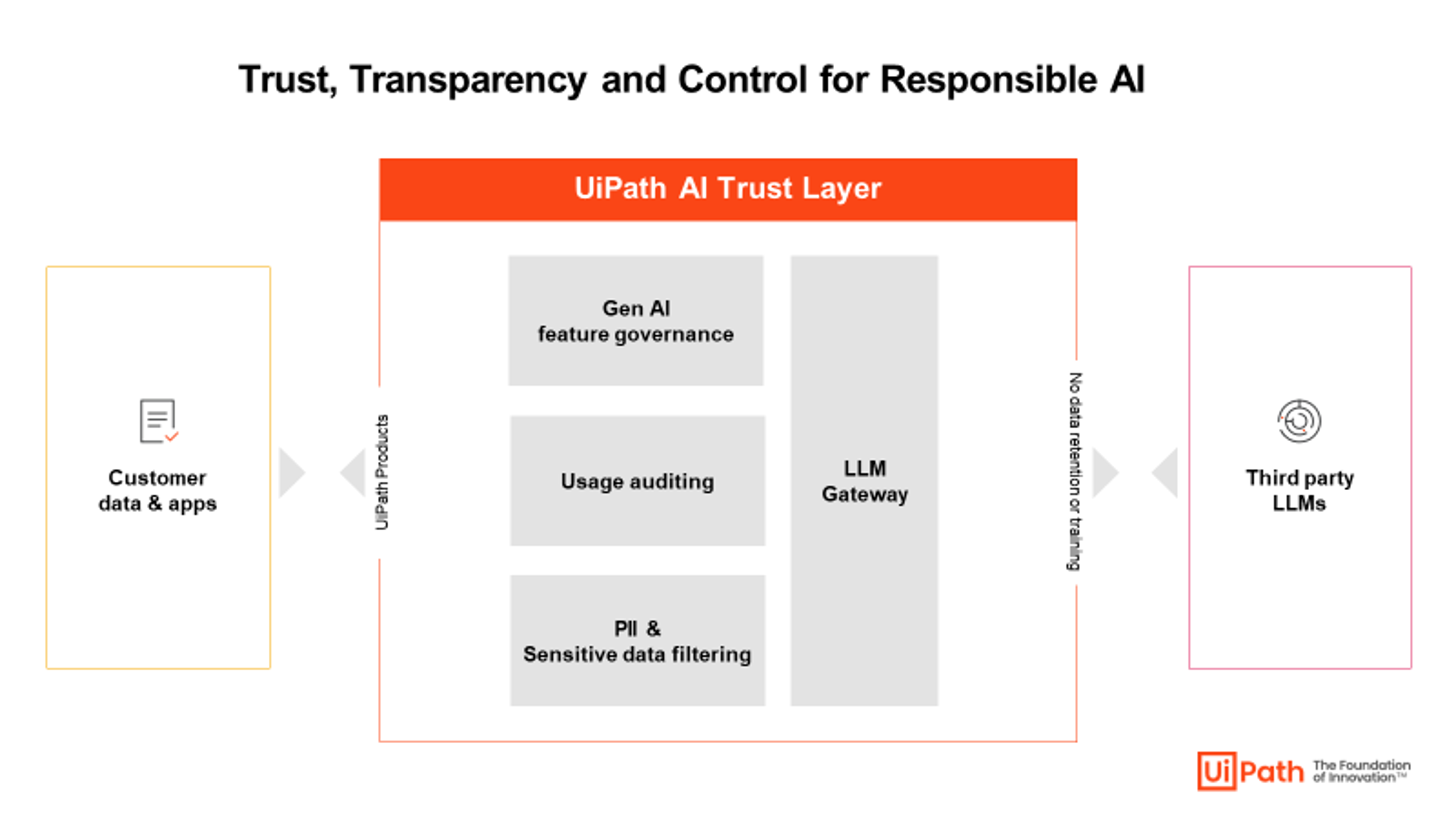

That brings us to the upcoming availability of the UiPath AI Trust Layer, which will enhance our enterprise data privacy policies and Responsible AI principles with additional software-defined governance to help customers responsibly scale their automation ambitions with Generative AI.

The UiPath AI Trust Layer has the following foundational principles:

Trust

UiPath treats all data that interacts with UiPath products and third-party LLMs with the highest level of integrity, security, and privacy. An audit trail showing who used Generative AI features, when, and with which data is crucial to maintaining ethical and compliance standards.

Transparency

UiPath customers should have complete visibility into the use of Generative AI features, models, and the transfer of data from the UiPath Platform to third-party models. Dashboard audit and cost control tools, paired with zero data sharing or storage outside customer-managed UiPath tenants, will scale the value of responsible AI-powered automation.

Control

UiPath customers should have administrative control over the mitigation of sensitive and harmful data that could be sent to LLMs and role-based policies to manage appropriate developer access to Generative AI features.

The UiPath AI Trust Layer will allow contextualized customer data, and customer interactions with UiPath products, to securely flow to trusted third-party LLMs. It will deliver the following features:

Generative AI feature controls: administrative controls for all Generative AI feature access and usage at an organization and tenant level, with default opt-out training policies for third-party models.

PII/sensitive data filtering: personally identifiable information (PII) is masked, and data is encrypted at rest (AES-256) and in transit (TLS 1.2) and is not used to improve third-party Generative AI models.

Usage auditing and cost control: visibility into Generative AI feature costs and usage distribution across UiPath-managed Generative AI models as well as your connected models.

LLM gateway: a centralized and secure proxy service in which all customer data sent to LLMs is confidential, load-balanced, and adheres to defined data governance policies.
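To make the features above more concrete, here is a minimal Python sketch of how a gateway might combine role-based access, PII masking, and audit logging before a prompt leaves the platform. It is purely illustrative and is not the UiPath implementation: the `Gateway` class, the `mask_pii` function, the role names, and the two regular expressions are all hypothetical, and real PII detection needs far more than pattern matching.

```python
import re
from dataclasses import dataclass, field

# Hypothetical patterns for illustration only; production PII detection
# would cover many more data types and use more robust techniques.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def mask_pii(text: str) -> str:
    """Replace e-mail addresses and US SSN-shaped numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return SSN_RE.sub("[SSN]", text)


@dataclass
class Gateway:
    """Toy proxy: checks the caller's role, masks the prompt, logs the event."""

    allowed_roles: set
    audit_log: list = field(default_factory=list)

    def prepare(self, user: str, role: str, prompt: str) -> str:
        # Control: only roles granted access may use Generative AI features.
        if role not in self.allowed_roles:
            self.audit_log.append({"user": user, "action": "denied"})
            raise PermissionError(f"role {role!r} may not use Generative AI features")
        masked = mask_pii(prompt)
        # Transparency: record who sent what volume of data, not the data itself.
        self.audit_log.append(
            {"user": user, "action": "forwarded", "chars": len(masked)}
        )
        # A real gateway would now forward `masked` to the model over TLS.
        return masked


gateway = Gateway(allowed_roles={"developer"})
print(gateway.prepare("ana", "developer", "Email jane.doe@example.com about 123-45-6789"))
# prints: Email [EMAIL] about [SSN]
```

Routing every request through a single `prepare` step is what lets one policy apply to every model call; the audit log here stores only metadata so that the log itself does not become a second copy of sensitive data.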

The UiPath AI Trust Layer ensures all automation users can use the Generative AI-powered capabilities of the platform with confidence, knowing they are governed by company data and personnel policies.

Developers can build automations with AI models and use new AI-powered experiences like UiPath Autopilot for Studio to develop automations faster than ever before. They will realize faster time to value with UiPath Document Understanding and Communications Mining using new Generative AI features related to the classification, annotation, and extraction of information from messages and documents. These new experiences dramatically improve productivity and accelerate automation deployment from weeks to days.

Business users will now be able to leverage their business expertise, access to shared prompts and embeddings, and a suite of prebuilt applications such as UiPath Autopilot for Assistant to realize the full value of automation.

Center of excellence leads and administrators will be able to make all this happen easily with rich policies to audit LLM usage, manage metering and LLM costs, and govern LLM data privacy and input/prediction output.

We are confident the UiPath AI Trust Layer will accelerate the adoption of responsible, AI-powered automation for everyone.

Join the UiPath Insider program to get early access to builds of new features and updates.

The above is intended to outline our general product direction. It is intended for information purposes only and may not be incorporated into any contract.

It is not a commitment to deliver any material, code, or functionality, and should not be relied upon in making purchasing decisions.

The development, release, and timing of any features or functionality described for UiPath’s products remains at the sole discretion of UiPath.

Senior Manager, Product Management, UiPath
