RPA: Paving Big Data’s Unstructured Last Mile

In the context of Big Data, the last mile refers to collecting the final pockets of enterprise information so that data capture is, for all intents and purposes, comprehensive. Unless the last mile is completed, Big Data doesn’t truly live up to its name: analytics can’t realize its full potential, and reporting is hard pressed to meet management expectations. For all the hype and attention around data, analytics and advanced reporting, it’s tempting to assume this key last-mile issue has already been addressed. In fact, it hasn’t. Integrating isolated pools of company information into enterprise data capture has remained a difficult challenge with no easy solution, until now. Robotic process automation (RPA) technology, particularly in light of recent innovations, has the unique combination of features, capabilities and benefits to complete the last mile quickly and effectively.

Big Data’s Last Mile Challenge

The prerequisites for effective data capture are a common data model and centralized repositories. Centralized, standardized data also delivers tremendous operational benefits, so companies have been striving toward that end for decades. Three major technologies, ERP, CRM and BPMS, are all built on those attributes, and their increasing adoption set the stage for today’s awareness of and focus on data analytics. Unfortunately, significant obstacles remained.

One of those obstacles was the lingering presence of legacy systems. ERP, the first of the three technologies to emerge in the 1990s, delivered on its promise of supply chain efficiencies through common data and standardized processes. However, early generations gained a reputation for being time-consuming to deploy and expensive to customize during implementation. Since the alternative to customizing the software was standardizing operational processes, users pushed back, and legacy systems quickly found legions of business champions.

Over time, ERP systems became more nimble and more specialized for industries and sub-industries. This led to larger implementation footprints and a bigger share of enterprise data and processes, all changes that favored data capture and management. What these technological innovations could not address was the continual, significant volume of mergers and acquisitions, each of which assumed the acquirer’s technical and data architecture would absorb the acquired company’s architecture. Unfortunately, most of the time that turned out not to be the case: “M&A failures are between 40-80 percent — with an inability to integrate systems one of the leading causes.” The result of these failed integrations was a corresponding failure to advance common enterprise data.

However, the greatest limitation of ERP systems in the quest for common data is the law of diminishing returns: beyond a certain point in a deployment, the cost of implementing the technology is no longer justified by the benefits it produces.

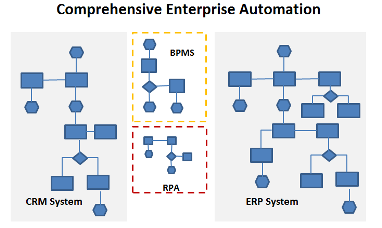

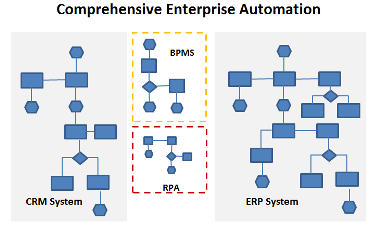
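The law of diminishing returns described above can be stated compactly. As a stylized sketch, assume a hypothetical benefit function B(x) that grows ever more slowly (strictly concave) and a cost function C(x) that grows at least as fast (convex) as the automation scope x of a deployment expands. Net benefit then peaks where marginal benefit equals marginal cost:

```latex
N(x) = B(x) - C(x), \qquad
N'(x^{*}) = 0 \;\Longleftrightarrow\; B'(x^{*}) = C'(x^{*}), \qquad
x > x^{*} \;\Longrightarrow\; B'(x) < C'(x)
```

Past x*, each further increment of scope costs more than it returns, which is why the smallest processes are left outside a given system’s footprint.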

That immutable law led to the emergence of business process management systems (BPMS). This software incorporated a rules engine and data repository, enabling companies to automate business processes too small to be reasonably handled by an ERP system. Another ERP constraint, lengthy customization timelines, led many companies to deploy customer relationship management (CRM) systems for focused, flexible automation of their sales and marketing processes. By leveraging all three technologies, companies are able to maximize their automation footprint, increase operational efficiency and integrate data between the systems.

The Last Mile is Paved with Unstructured Data

Yet even the larger automation footprint and three data repositories created by these systems did not eliminate the last mile of data. Again, the culprit was the law of diminishing returns. Even though BPMS requires a far smaller investment than ERP, there is a point at which it makes no sense to use it to automate a small process. While these small manual processes are marginal in and of themselves, in the aggregate they represent substantial operational costs and generate significant pools of isolated data.

These manual processes are a sweet spot for robotic process automation. By combining visual, intuitive process modeling with presentation-layer integration (working through applications’ user interfaces, as a person would), RPA has a proven track record of quickly automating these processes, generating significant savings and enabling much higher performance levels and accuracy.

Recent innovations by leading vendors have brought enterprise capabilities to this software, along with an API that allows its robots to be integrated with other automation systems. By leveraging these enterprise features, a company can complete the last mile and capture both structured and unstructured data by creating an integrated automation footprint spanning ERP, CRM, BPMS and RPA. If such an integrated solution is not an immediate possibility, robotic software can still complete the last mile by pooling unstructured data in a specified location and in a standard format.
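To make “a specified location and a standard format” concrete, here is a minimal Python sketch of the pooling step. It assumes a robot has already extracted field values from emails, PDFs and spreadsheets into dictionaries; the source names, field mappings (`FIELD_MAPS`), schema (`STANDARD_FIELDS`) and output path are hypothetical, and an RPA tool would perform this step with its own activities rather than this code.

```python
import csv
from pathlib import Path

# Hypothetical standard schema that every pooled record is mapped onto.
STANDARD_FIELDS = ["source", "customer_id", "amount", "date"]

# Hypothetical per-source field names, as a robot might extract them.
FIELD_MAPS = {
    "email": {"cust": "customer_id", "total": "amount", "sent": "date"},
    "pdf": {"CustomerNo": "customer_id", "InvoiceTotal": "amount", "InvoiceDate": "date"},
    "spreadsheet": {"Customer ID": "customer_id", "Amount": "amount", "Date": "date"},
}


def to_standard(source: str, record: dict) -> dict:
    """Rename one source record's fields to the standard schema.

    Unmapped source fields are ignored; schema fields the source lacks stay ''.
    """
    mapping = FIELD_MAPS[source]
    row = {field: "" for field in STANDARD_FIELDS}
    row["source"] = source
    for key, value in record.items():
        if key in mapping:
            row[mapping[key]] = str(value).strip()
    return row


def pool(records: list[tuple[str, dict]], out_path: Path) -> int:
    """Append standardized rows to a single CSV at a fixed location.

    The header is written only when the file is first created.
    Returns the number of rows appended.
    """
    out_path.parent.mkdir(parents=True, exist_ok=True)
    write_header = not out_path.exists()
    with out_path.open("a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=STANDARD_FIELDS)
        if write_header:
            writer.writeheader()
        for source, record in records:
            writer.writerow(to_standard(source, record))
    return len(records)
```

Calling `pool(batch, Path("last_mile_pool/standardized.csv"))` after each robot run appends to the same file, so downstream reporting tools have one predictable, uniformly structured source to load.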

Unstructured data is typically found in spreadsheets, PDFs, emails and scanned documents. This last-mile data remains outside data capture because of the time required to clean, enrich and combine it. Usually, groups of people do this work in Excel, which can take days. However, because the most innovative robots are equipped with .NET capabilities and VB expressions and functions, they can create, filter, merge, structure and analyze this isolated data. The end result: manual capture work that once took days is now accomplished in minutes.
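The clean, filter, merge, structure and analyze steps can be illustrated on two spreadsheet exports saved as CSV. This is a hedged Python sketch of the data logic only, not the .NET/VB implementation such robots use; the column names (`vendor_id`, `amount`, `vendor_name`, `region`) and the `UNMATCHED` tag are hypothetical.

```python
import csv
import io
from collections import defaultdict
from decimal import Decimal, InvalidOperation


def parse_amount(text: str):
    """Clean a currency string such as '$1,200.50' into a Decimal, or None."""
    cleaned = text.strip().replace("$", "").replace(",", "")
    if not cleaned:
        return None
    try:
        value = Decimal(cleaned)
    except InvalidOperation:
        return None
    return value if value.is_finite() else None  # reject NaN/Infinity


def clean_invoices(raw_csv: str) -> list[dict]:
    """Clean and filter: trim IDs, parse amounts, drop incomplete rows."""
    rows = []
    for rec in csv.DictReader(io.StringIO(raw_csv)):
        vendor_id = (rec.get("vendor_id") or "").strip()
        amount = parse_amount(rec.get("amount") or "")
        if vendor_id and amount is not None:
            rows.append({"vendor_id": vendor_id, "amount": amount})
    return rows


def merge_vendors(invoices: list[dict], vendor_csv: str) -> list[dict]:
    """Merge/enrich: attach vendor name and region; tag unknown vendors."""
    vendors = {
        r["vendor_id"].strip(): r for r in csv.DictReader(io.StringIO(vendor_csv))
    }
    merged = []
    for inv in invoices:
        v = vendors.get(inv["vendor_id"])
        merged.append({
            **inv,
            "vendor_name": v["vendor_name"].strip() if v else "UNMATCHED",
            "region": v["region"].strip() if v else "UNMATCHED",
        })
    return merged


def totals_by_region(merged: list[dict]) -> dict:
    """Structure/analyze: total invoice amounts per region."""
    totals = defaultdict(Decimal)  # Decimal() starts each region at 0
    for row in merged:
        totals[row["region"]] += row["amount"]
    return dict(totals)
```

Using `Decimal` rather than `float` keeps currency totals exact, and tagging unmatched vendors instead of silently dropping them leaves the remaining gaps visible in the report.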

Completing this last mile releases significant enterprise benefits. The most immediate is richer, more accurate management reporting: reports no longer need to paper over isolated data pockets with assumptions or wait for manual capture, so they can be both timely and accurate. The full value of Big Data becomes achievable because large amounts of external data can now be integrated with high-quality internal data to confidently drive decision-making and the associated analytics. Finally, the company reaches the data quality levels needed to begin pursuing revenue from data monetization, a tactic that will offset the cost of its own external data purchases.

Strategic Advisor, Tquila Automation
